Linear algebra classes often jump straight to the definition of a projector (as a matrix) when talking about orthogonal projections in linear spaces. More often than not, it is not clear how that definition arises. This is what is covered in this post.

Orthogonal projection: how to build a projector

Case 1 – 2D projection over (1,0)

It is quite straightforward to understand that orthogonal projection over (1,0) can be practically achieved by zeroing out the second component of any 2D vector, at least if the vector is expressed with respect to the canonical basis \(\{e_1, e_2\}\). Albeit a trivial statement, it is worth restating: the orthogonal projection of a 2D vector over \(e_1\) amounts to its first component alone.

How can this be put math-wise? Since we know that the dot product evaluates the similarity between two vectors, we can use it to extract the first component of a vector \(v = (v_1, v_2)\). Once we have the value of the first component, we only need to multiply it by \(e_1\) itself, to know how far in the direction of \(e_1\) we need to go. Step by step, starting from \(v\), first we get the first component as \(v \cdot e_1 = v_1\); then we multiply this value by \(e_1\) itself: \(v_1 e_1 = (v_1, 0)\). This is in fact the orthogonal projection of the original vector. Writing down the operations we did in sequence, with proper transposing, we get

\[ e_1 (v^T e_1) = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \left( [v_1, v_2] \begin{bmatrix} 1 \\ 0 \end{bmatrix} \right) = \begin{bmatrix} v_1 \\ 0 \end{bmatrix} \]
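The two steps above can be sketched in a few lines of NumPy. This is a minimal illustration assuming NumPy is available; the test vector \((5, 7)\) is a hypothetical choice, and any 2D vector would do.

```python
import numpy as np

# Canonical basis vector onto which we project
e1 = np.array([1.0, 0.0])
# Hypothetical test vector; any 2D vector would do
v = np.array([5.0, 7.0])

# Step 1: the dot product extracts the first component of v
first_component = v @ e1
# Step 2: move that far along the direction of e1
projection = first_component * e1

print(first_component)  # 5.0
print(projection)       # [5. 0.]
```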

One simple yet useful fact is that when we project a vector, its norm must not increase. This should be intuitive: the projection process either takes information away from a vector (as in the case above) or rephrases what is already there. Either way, it certainly does not add any. We may restate this fact with the following proposition:

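PROP 1: \( \|p\| \le \|v\| \), where \(p\) is the orthogonal projection of \(v\).

This can easily be seen through the Pythagorean theorem (and in fact it only holds for orthogonal projections, not oblique ones): since \(p\) is orthogonal to \(v - p\),

\[ \|v\|^2 = \|p\|^2 + \|v - p\|^2 \ge \|p\|^2 \]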

Case 2 – 2D projection over (1,1)

Attempting to apply the same technique with an arbitrary projection target, however, does not seem to work. Suppose we want to project over \(u = (1, 1)\). Repeating what we did above for a test vector \(v = (3, 0)\), we would get

\[ \begin{bmatrix} 1 \\ 1 \end{bmatrix} \left( [3, 0] \begin{bmatrix} 1 \\ 1 \end{bmatrix} \right) = [3, 3]. \]

This violates the previously discovered fact that the norm of the projection should be less than or equal to the original norm (here \(\|(3, 3)\| = 3\sqrt 2 > 3 = \|(3, 0)\|\)), so it must be wrong. In fact, visual inspection reveals that the correct orthogonal projection of \((3, 0)\) over \(u\) is \((\frac{3}{2}, \frac{3}{2})\).

The caveat here is that the vector onto which we project must have norm 1. This is vital every time we care about the direction of something but not its magnitude, as in this case. Normalizing \(u\) yields \(\hat u = \frac{u}{\|u\|} = (\frac{1}{\sqrt 2}, \frac{1}{\sqrt 2})\). The projection of \(v\) over \(\hat u\) is then obtained through

\[ \begin{bmatrix} \frac{1}{\sqrt 2} \\ \frac{1}{\sqrt 2} \end{bmatrix} \left( [3, 0] \begin{bmatrix} \frac{1}{\sqrt 2} \\ \frac{1}{\sqrt 2} \end{bmatrix} \right) = [\frac{3}{2}, \frac{3}{2}], \]

which now is indeed correct!

PROP 2: The vector on which we project must be a unit vector (i.e. a norm 1 vector).
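A small NumPy sketch of Case 2, with the same test vector \(v = (3, 0)\) and target \(u = (1, 1)\), contrasts the naive computation with the normalized one. The helper name `project_onto` is hypothetical, chosen for illustration.

```python
import numpy as np

def project_onto(v, u):
    """Orthogonal projection of v onto the line spanned by u (u need not be unit)."""
    u_hat = u / np.linalg.norm(u)  # PROP 2: normalize the target first
    return u_hat * (v @ u_hat)

v = np.array([3.0, 0.0])
u = np.array([1.0, 1.0])

naive = u * (v @ u)           # skips normalization: gives (3, 3)
correct = project_onto(v, u)  # gives (1.5, 1.5) up to rounding

# The naive result breaks PROP 1, the normalized one respects it
naive_too_long = np.linalg.norm(naive) > np.linalg.norm(v)
correct_ok = np.linalg.norm(correct) <= np.linalg.norm(v)
```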

Case 3 – 3D projection onto a plane

A good thing to think about is what happens when we want to project onto more than one vector. For example, what happens if we project a point in 3D space onto a plane? The idea is pretty much the same, and the technicalities amount to stacking in a matrix the vectors that span the plane onto which to project.

Suppose we want to project the vector \(v = (5, 7, 9)\) onto the plane spanned by \(e_1 = (1, 0, 0)\) and \(e_2 = (0, 1, 0)\). The steps are the same: we still need to know how similar \(v\) is to each of the two vectors, and then to magnify those similarities in the respective directions.

\[ \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \end{bmatrix} \begin{bmatrix} 5 \\ 7 \\ 9 \end{bmatrix} = \begin{bmatrix} 1 & 0 \\ 0 & 1 \\ 0 & 0 \end{bmatrix} \begin{bmatrix} 5 \\ 7 \end{bmatrix} = 5 \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix} + 7 \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 5 \\ 7 \\ 0 \end{bmatrix} \]

The only difference from the previous cases is that the vectors onto which we project are stacked together in matrix form, arranged so that the operations we end up performing are the same as in the single-vector cases.
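The same two-step computation for the plane can be sketched in NumPy; here the columns of the (hypothetically named) matrix `Z` are the two vectors spanning the plane.

```python
import numpy as np

# Columns of Z are the orthonormal vectors spanning the plane (here the xy-plane)
Z = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])
v = np.array([5.0, 7.0, 9.0])

similarities = Z.T @ v         # component of v along each column: (5, 7)
projection = Z @ similarities  # recombine along the columns: (5, 7, 0)
```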

The rise of the projector

As we have seen, the projection of a vector \(v\) over a set of orthonormal vectors \(Z\) (stacked as the columns of a matrix) is obtained as

\[ Z (Z^T v). \]

And up to now, we have always computed the last product \(Z^T v\) first. It should come as no surprise that, by associativity, we can also do it the other way around: first compute \(Z Z^T\), and then multiply the result by \(v\). This \(Z Z^T\) is the projection matrix. However, the idea is much more understandable when written in the expanded form, as it shows the process which leads to the projector.

THEOREM 1: The projection of \(v\) over an orthonormal basis \(Z\) is

\[ P v = Z Z^T v, \quad \text{with } P = Z Z^T. \]

So here it is: take any basis of whatever linear subspace, make it orthonormal, stack it in a matrix, multiply that matrix by its own transpose, and you get a matrix whose action is to drop any vector of the ambient (higher-dimensional) space onto that subspace. Neat.
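The recipe can be sketched as a small NumPy function, under stated assumptions: the input columns are linearly independent, and the orthonormalization step uses NumPy's reduced QR factorization (`np.linalg.qr`), which is one of several ways to orthonormalize a basis. The function name `projector` and the example basis are hypothetical.

```python
import numpy as np

def projector(basis):
    """Orthogonal projector onto the column space of `basis`.

    Assumes the columns of `basis` are linearly independent.
    """
    # Orthonormalize: Z has orthonormal columns spanning the same subspace
    Z, _ = np.linalg.qr(basis)
    # Multiply the stacked basis by its transpose: P = Z Z^T
    return Z @ Z.T

# Hypothetical example: the xy-plane in R^3, given by a non-orthogonal basis
B = np.array([[1.0, 1.0],
              [0.0, 1.0],
              [0.0, 0.0]])
v = np.array([5.0, 7.0, 9.0])

P = projector(B)
projected = P @ v    # (5, 7, 0), matching Case 3
naive = B @ B.T @ v  # (17, 12, 0): skipping orthonormalization gives a wrong result
```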

Projector matrix properties

- The norm of the projected vector is less than or equal to the norm of the original vector.

- A projection matrix is idempotent: once a vector is projected, further projections don't change it. This, in fact, is the only requirement that defines a projector, and it is the definition you find in textbooks: \(P^2 = P\). The other fundamental property we required in the previous examples, i.e. that the projection basis is orthonormal, is tied to this: for \(P = Z Z^T\) with linearly independent columns in \(Z\), \(P^2 = Z (Z^T Z) Z^T\) equals \(P\) exactly when \(Z^T Z = I\). However, if the projection is orthogonal, as we have assumed up to now, then we must also have \(P^T = P\).
- The eigenvalues of a projector are only 0 and 1. For an eigenvalue \(\lambda\) of \(P\) with eigenvector \(v \neq 0\),

\[ \lambda v = Pv = P^2 v = P(\lambda v) = \lambda P v = \lambda^2 v \Rightarrow \lambda = \lambda^2 \Rightarrow \lambda \in \{0, 1\} \]

- There exists a basis \(X\) of \(\mathbb{R}^n\) in which \(P\) takes the block form \(\begin{bmatrix} I_k & 0 \\ 0 & 0_{n-k} \end{bmatrix}\), i.e. \(P = X \begin{bmatrix} I_k & 0 \\ 0 & 0_{n-k} \end{bmatrix} X^{-1}\), with \(k\) being the rank of \(P\) (a projector is diagonalizable, since its minimal polynomial divides \(\lambda^2 - \lambda\), which has distinct roots). If we further decompose \(X = [X_1, X_2]\), with \(X_1\) being \(n \times k\) and \(X_2\) being \(n \times (n-k)\), the existence of the basis \(X\) shows that \(P\) really sends points from \(\mathrm{Im}(X_1)\) into themselves and points from \(\mathrm{Im}(X_2)\) into \(0\). It also shows that \(\mathbb{R}^n = \mathrm{Im}(X_1) \oplus \mathrm{Im}(X_2) = \mathrm{Im}(P) \oplus \mathrm{Ker}(P)\).
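These properties are easy to check numerically. The following NumPy sketch builds \(P = Z Z^T\) for a randomly generated (hypothetical) 2-dimensional subspace of \(\mathbb{R}^5\) and verifies each one up to floating-point tolerance.

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 5, 2

# Random k-dimensional subspace of R^n, orthonormalized via reduced QR
Z, _ = np.linalg.qr(rng.standard_normal((n, k)))
P = Z @ Z.T
v = rng.standard_normal(n)

# Norm does not increase (PROP 1); small slack for rounding
norm_ok = np.linalg.norm(P @ v) <= np.linalg.norm(v) + 1e-12
# Idempotent and, since the projection is orthogonal, symmetric
idempotent = np.allclose(P @ P, P)
symmetric = np.allclose(P.T, P)
# Eigenvalues: n - k zeros and k ones (eigvalsh returns them in ascending order)
eigenvalues = np.linalg.eigvalsh(P)
# Rank k; v splits into a part in Im(P) and a part in Ker(P)
rank = np.linalg.matrix_rank(P)
v_im, v_ker = P @ v, v - P @ v
split_ok = np.allclose(P @ v_ker, 0.0) and np.allclose(v_im + v_ker, v)
```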

Model Order Reduction

Is there any application of projection matrices to applied math? Indeed.

It is often the case (or, at least, the hope) that the solution to a differential problem lies in a low-dimensional subspace of the full solution space. If some ![]() is the solution to the Ordinary Differential Equation

is the solution to the Ordinary Differential Equation

![]()

then there is hope that there exists some subspace ![]() , s.t.

, s.t. ![]() in which the solution lives. If that is the case, we may rewrite it as

in which the solution lives. If that is the case, we may rewrite it as

![]()

for some appropriate coefficients ![]() , which are the components of

, which are the components of ![]() over the basis

over the basis ![]() .

.

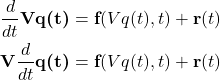

Assuming that the base ![]() itself is time-invariant, and that in general

itself is time-invariant, and that in general ![]() will be a good but not perfect approximation of the real solution, the original differential problem can be rewritten as:

will be a good but not perfect approximation of the real solution, the original differential problem can be rewritten as:

where ![]() is an error.

is an error.
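A NumPy sketch of this setting follows, writing the ODE as \(\dot x(t) = f(x(t), t)\), the reduced basis as a matrix \(Z\) with orthonormal columns, the coefficients as \(y(t)\) and the error as \(r(t)\). Everything in it is an assumption made for illustration: the linear right-hand side \(f(x, t) = A x\) with a random matrix \(A\), the random basis \(Z\), and in particular the way the reduced system is closed. Here we use Galerkin projection, one common choice in model order reduction, which asks the error to be orthogonal to the subspace, \(Z^T r(t) = 0\). With \(Z^T Z = I\) this gives \(\dot y = Z^T f(Z y, t)\) and \(r = -(I - Z Z^T) f(Z y, t)\), i.e. the error is exactly the part of \(f\) that the projector \(Z Z^T\) discards. With orthonormal \(Z\), the coefficients of a state \(x\) lying in the span of \(Z\) are also recovered as \(y = Z^T x\).

```python
import numpy as np

rng = np.random.default_rng(2)
n, k = 50, 4

# Hypothetical reduced basis: k orthonormal columns in R^n
Z, _ = np.linalg.qr(rng.standard_normal((n, k)))
# Hypothetical linear dynamics dx/dt = A x
A = -np.eye(n) + 0.1 * rng.standard_normal((n, n))

def f(x, t):
    """Full right-hand side of dx/dt = f(x, t)."""
    return A @ x

def reduced_rhs(y, t):
    """Galerkin-reduced right-hand side: dy/dt = Z^T f(Z y, t)."""
    return Z.T @ f(Z @ y, t)

def residual(y, t):
    """Error r = Z dy/dt - f(Z y, t), which equals -(I - Z Z^T) f(Z y, t)."""
    return Z @ reduced_rhs(y, t) - f(Z @ y, t)

# Coefficients of a state lying in span(Z) are recovered as y = Z^T x
y_true = np.array([2.0, -1.0, 0.5, 1.0])
x_in_span = Z @ y_true
y_recovered = Z.T @ x_in_span

# Integrate the k-dimensional system with explicit Euler
y, dt = y_true.copy(), 0.01
for step in range(100):
    y = y + dt * reduced_rhs(y, step * dt)

x_approx = Z @ y      # lift the reduced state back to R^n
r = residual(y, 1.0)  # error of the reduced model at t = 1
```

Explicit Euler with a fixed step is used only to keep the sketch short; any ODE integrator could advance the reduced system.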